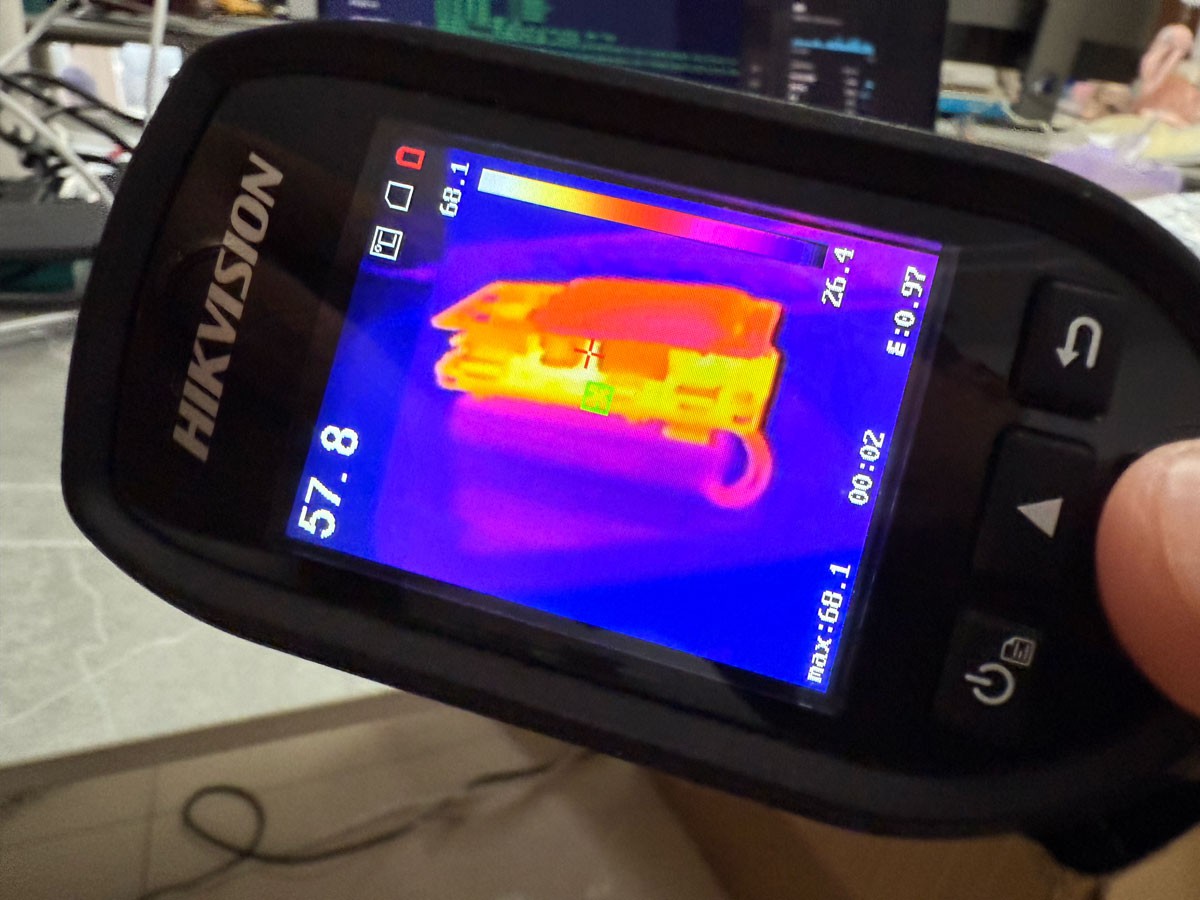

I tried running Ollama on the Zero 2W, and the output speed was actually quite decent. I also ran a chatbot program at the same time; it did slow things down a bit, but it shows that the Zero 2W does have potential! That said, the core temperature quickly rose to 68°C, so the board is clearly struggling a bit.
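To keep an eye on the core temperature while the model is generating, something like the following could be left running in a second terminal. This is only a minimal sketch: it assumes the usual Raspberry Pi OS sysfs node `/sys/class/thermal/thermal_zone0/temp` (which reports millidegrees Celsius), and the function names are made up for illustration.

```python
from pathlib import Path
import time

# Assumption: the standard Linux thermal sysfs node, as found on Raspberry Pi OS.
THERMAL_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a sysfs reading in millidegrees Celsius (e.g. '68000') to degrees."""
    return int(raw.strip()) / 1000.0


def read_core_temp(path: Path = THERMAL_PATH) -> float:
    """Read the current core temperature in degrees Celsius."""
    return parse_millidegrees(path.read_text())


if __name__ == "__main__":
    # Print the temperature every two seconds until interrupted with Ctrl+C.
    while True:
        print(f"{read_core_temp():.1f} °C")
        time.sleep(2)
```

A reading of `68000` in that file corresponds to the 68°C mentioned above.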

Install Ollama by following this tutorial: https://github.com/Gilzone/Installing-a-LLM-on-Raspberry-Pi-Zero-2-W
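Once Ollama is installed and serving, the output speed can be measured rather than eyeballed. The sketch below assumes Ollama is listening on its default local port (11434) and uses the `eval_count` and `eval_duration` fields that the `/api/generate` endpoint returns with a non-streaming request. The model name `tinyllama` and the prompt are placeholders, not necessarily what was used here; substitute whatever model you pulled.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(result: dict) -> float:
    """Compute generation speed; eval_duration is reported in nanoseconds."""
    seconds = result["eval_duration"] / 1e9
    return result["eval_count"] / seconds


def generate(model: str, prompt: str) -> dict:
    """Send one non-streaming generation request and return the parsed JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    # Generous timeout: a small board can take a long time to answer.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.load(response)


if __name__ == "__main__":
    # "tinyllama" is a placeholder model name (an assumption for this example).
    result = generate("tinyllama", "Say hello in one sentence.")
    print(result["response"])
    print(f"{tokens_per_second(result):.2f} tokens/s")
```

Running it once on its own and once with the chatbot program active would give a rough number for how much the second workload slows things down.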

Jdaie
